

Admissions

See the Rubric.

Evaluation of undergraduate programs relies on four sets of data:

- Mean university SAT/ACT scores sourced from the Integrated Postsecondary Education Data System (IPEDS)

- Barron's selectivity ratings (in the absence of data on SAT/ACT scores) sourced from Barron's Profiles of American Colleges

- Minimum GPA and admissions test requirements sourced from the undergraduate catalog or admissions requirements webpage

- Mean GPA for the most recently admitted cohort of teacher candidates provided by the program

Evaluation of graduate and non-traditional programs relies on two sets of data:

- Minimum GPA, admissions test, and audition requirements sourced from the graduate catalog or admissions requirements webpage

- Mean GPA for the most recently admitted cohort of teacher candidates provided by the program

After the mean SAT/ACT data and Barron's ratings are collected for each institution, a team of analysts uses course catalogs and program admissions webpages to determine the GPA required for program entry.

For undergraduate programs, which typically admit teacher candidates in their junior year, we identify the minimally acceptable GPA to be admitted to the program. In instances where a program sets both unconditional and conditional GPA thresholds, separate measures for different segments of undergraduate coursework, or other variations where there are multiple minimums, we use the value that applies to all coursework and serves as the floor for all admitted candidates. Acceptable thresholds for graduate and non-traditional programs can be either a candidate's overall GPA or that of upper division coursework only.
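The multiple-minimums rule above can be sketched as a small filter: keep only thresholds computed over all coursework, then take the lowest one, since a conditional cutoff below the regular one is the real floor for every admitted candidate. This is a minimal illustration, not NCTQ's own tooling; the `GpaThreshold` record, its fields, and the sample catalog values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GpaThreshold:
    label: str
    value: float
    # False for a GPA computed over only part of the coursework (e.g., the major)
    applies_to_all_coursework: bool


def admission_floor(thresholds: List[GpaThreshold]) -> Optional[float]:
    """Return the GPA floor that applies to all coursework and all admitted candidates."""
    overall = [t.value for t in thresholds if t.applies_to_all_coursework]
    # The lowest all-coursework cutoff (often a conditional one) is the true floor.
    return min(overall) if overall else None


# Hypothetical catalog language with three different minimums
catalog = [
    GpaThreshold("unconditional admission", 3.0, True),
    GpaThreshold("conditional admission", 2.75, True),
    GpaThreshold("major coursework only", 3.2, False),
]
print(admission_floor(catalog))  # 2.75
```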

Both undergraduate and graduate programs are invited to share the certified mean GPA of their most recently admitted cohort. When provided, these figures are scored on a separate, more demanding scale than the minimum GPA values: although a cohort mean reflects the strength of admitted candidates, it does not guarantee that every candidate's GPA is above the program minimum.

Once these data are collected, as detailed under the Scoring Rubric, undergraduate program grades are determined by the highest criterion satisfied, while graduate and non-traditional program grades are derived from the sum of the GPA and candidate screening measures.
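The two grading shapes differ in structure: undergraduate grades come from the best tier a program satisfies, while graduate and non-traditional grades add up points across measures. The sketch below contrasts them. The tier names, cutoffs, and point values are hypothetical placeholders, not the actual Scoring Rubric thresholds.

```python
from typing import Callable, Dict, List, Optional, Tuple

Program = Dict[str, float]


def highest_satisfied(tiers: List[Tuple[str, Callable[[Program], bool]]],
                      program: Program) -> Optional[str]:
    """Undergraduate style: tiers are ordered best-first; return the first one met."""
    for grade, criterion in tiers:
        if criterion(program):
            return grade
    return None


def summed_points(measures: List[Callable[[Program], int]], program: Program) -> int:
    """Graduate/non-traditional style: total the points earned on each measure."""
    return sum(measure(program) for measure in measures)


# Hypothetical tiers; the cohort-mean tier uses a stricter cutoff than the minimum-GPA tiers
undergrad_tiers = [
    ("tier 1", lambda p: p.get("cohort_mean_gpa", 0.0) >= 3.5),
    ("tier 2", lambda p: p.get("min_gpa", 0.0) >= 3.0),
    ("tier 3", lambda p: p.get("min_gpa", 0.0) >= 2.75),
]

# Hypothetical measures: one for GPA, one for candidate screening (e.g., an audition)
grad_measures = [
    lambda p: 1 if p.get("min_gpa", 0.0) >= 3.0 else 0,
    lambda p: 1 if p.get("requires_audition", 0) else 0,
]

print(highest_satisfied(undergrad_tiers, {"min_gpa": 3.0}))  # tier 2
print(summed_points(grad_measures, {"min_gpa": 3.0, "requires_audition": 1}))  # 2
```

The ordering of `undergrad_tiers` matters: because the first satisfied tier wins, a program with a high cohort mean is credited at the top tier even if its stated minimum is low.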


Program Diversity

See the Rubric.

Evaluation relies on five data inputs:

- Teacher preparation program enrollment demographics sourced from Title II National Teacher Preparation Data

- State teacher workforce demographics sourced from U.S. Department of Education, National Center for Education Statistics, National Teacher and Principal Survey

- State population demographics sourced from the U.S. Census Bureau database

- Core-based statistical area (CBSA) demographics sourced from the U.S. Census Bureau database

- County demographics sourced from the U.S. Census Bureau database

Methodology in Brief

Analysis begins by identifying the IPEDS number, state, and county for each institution. Demographic data are then linked to institutions using one or more of these identifiers.

Teacher preparation program data

Title II National Teacher Preparation Data reports enrollment data obtained from institutions under the following demographic categories:

- Total Enrollment

- Hispanic Enrollment

- Indian Enrollment

- Asian Enrollment

- Black Enrollment

- Islander Enrollment

- White Enrollment

- Multi-racial Enrollment

Enrollment data are collected for the three most recent years of Title II releases. The sum of the six non-White enrollment categories across the three years is divided by the three-year Supported Sum to obtain the percentage of candidates of color reported for each institution.
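The pooled three-year calculation can be sketched as below. It divides a pooled numerator by a pooled denominator rather than averaging three annual percentages, so years with larger enrollment weigh more. The methodology does not spell out how the Supported Sum is built, so the sketch simply takes it as a per-year input (`supported_sum`, a hypothetical field name); the enrollment counts are made up.

```python
from typing import Dict, List

# The six non-White Title II categories named above
NON_WHITE = ["Hispanic", "Indian", "Asian", "Black", "Islander", "Multi-racial"]


def pct_candidates_of_color(years: List[Dict[str, int]]) -> float:
    """Percent of candidates of color pooled over the supplied Title II years, to one decimal."""
    numerator = sum(year[cat] for year in years for cat in NON_WHITE)
    denominator = sum(year["supported_sum"] for year in years)
    return round(100 * numerator / denominator, 1)


# Hypothetical counts for one institution over three releases
years = [
    {"Hispanic": 10, "Indian": 1, "Asian": 4, "Black": 6, "Islander": 0,
     "Multi-racial": 3, "White": 76, "supported_sum": 100},
    {"Hispanic": 12, "Indian": 0, "Asian": 5, "Black": 7, "Islander": 1,
     "Multi-racial": 2, "White": 83, "supported_sum": 110},
    {"Hispanic": 9, "Indian": 1, "Asian": 3, "Black": 8, "Islander": 0,
     "Multi-racial": 4, "White": 65, "supported_sum": 90},
]
print(pct_candidates_of_color(years))  # 25.3
```

Averaging the three annual percentages (24.0, 24.5, and 27.8) would instead give 25.4 for the same data, which is why the order of summing and dividing matters.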

State teacher workforce data

The demographic composition of the state teacher workforce is obtained from the U.S. Department of Education, National Center for Education Statistics, National Teacher and Principal Survey (formerly the Schools and Staffing Survey). Where state-level data are not available through the survey, demographic information is sourced from documentation published by the state.

Local demographics data

Under the standard, institutions are compared against their core-based statistical area (CBSA), a metropolitan or micropolitan area defined by the U.S. Census Bureau. CBSA designations are obtained through a lookup using the institution's county. For institutions outside a CBSA, we use the demographic data for the county in which the institution is located. Both sets of data are obtained from the U.S. Census Bureau database.
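The CBSA-or-county fallback amounts to a dictionary lookup with a default. In practice the mapping would come from a county-to-CBSA crosswalk such as the Census Bureau's delineation files; here it is a small hypothetical dictionary keyed by county code, and the codes shown are placeholders rather than real identifiers.

```python
from typing import Dict, Tuple


def comparison_area(county_fips: str, county_to_cbsa: Dict[str, str]) -> Tuple[str, str]:
    """Return the local comparison geography for an institution's county."""
    cbsa = county_to_cbsa.get(county_fips)
    if cbsa is not None:
        return ("CBSA", cbsa)
    # Counties outside any metropolitan or micropolitan area are compared directly.
    return ("county", county_fips)


crosswalk = {"00001": "90001"}  # placeholder codes, not real FIPS/CBSA identifiers
print(comparison_area("00001", crosswalk))  # ('CBSA', '90001')
print(comparison_area("00002", crosswalk))  # ('county', '00002')
```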

State demographic data

Used only as part of the State Outlier Comparison calculation, state-level demographics are obtained from the U.S. Census Bureau database.

Calculations under the standard

As explained in full under the Scoring Rubric, our analysis compares program enrollment separately with two populations: the state teacher workforce and the local area (CBSA). Programs receive full credit if they meet the diversity of the comparison population, within a 0.5 percentage point margin of error. Programs receive partial credit if they approach it, coming within 5.0 percentage points.

All figures are collected using one decimal place. Whole numbers are used in the public presentation of the data for simplicity.
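A minimal sketch of the credit rule, applied once against the state teacher workforce and once against the local area. It assumes the shortfall is measured in percentage points of candidates of color, that both boundaries (0.5 and 5.0 points) are inclusive, and that a program exceeding the comparison share earns full credit; the function name and sample figures are hypothetical. Rounding the gap to one decimal mirrors the one-decimal data collection and avoids floating-point noise at the boundaries.

```python
def diversity_credit(program_pct: float, comparison_pct: float) -> str:
    """Compare a program's share of candidates of color with a comparison population."""
    # Shortfall in percentage points, kept to one decimal like the source data
    gap = round(comparison_pct - program_pct, 1)
    if gap <= 0.5:
        return "full"
    if gap <= 5.0:
        return "partial"
    return "none"


program = 25.3
print(diversity_credit(program, 25.7))  # full (0.4-point shortfall)
print(diversity_credit(program, 30.2))  # partial (4.9-point shortfall)
print(round(program))  # 25, the whole number shown publicly
```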


Early Reading

See the Rubric.

Evaluation relies on two sources of data:

- Syllabi for all required courses within the program that address literacy instruction

- All required textbooks for each required literacy course

A team of analysts uses course catalogs to determine the required coursework for each elementary program under evaluation. Analysts then read course titles and descriptions to pinpoint courses that address reading instruction. Textbook information is gathered from syllabi and university bookstores.

A separate team of expert reading analysts, all professors and practitioners with advanced degrees and deep knowledge of how children learn to read, evaluates reading syllabi and textbooks using a detailed scoring protocol.

Fifteen percent of syllabi are randomly selected for a second evaluation to assess scoring variances. Each course is analyzed for its coverage of each of the five components of early reading instruction, as identified by the National Reading Panel (2000): phonemic awareness, phonics, fluency, vocabulary, and reading comprehension. Course analysis focuses on three main elements:

- Use of course time to address each component, as specified by the lecture schedule

- Whether students are required to demonstrate knowledge of individual components through assessments, assignments, or instructional practice

- Whether the assigned text or texts accurately present the components of reading instruction. Ratings of reviewed reading textbooks are provided here.

  - The reviewer determines whether the text can serve as a 'core' text, covering all five components, or is designed to teach only one or a combination of the components, and analyzes how the text approaches assessment and strategies for struggling readers.

  - The reviewer determines whether the content defines and presents each component in light of the science, shedding old, unproven practices and advancing a depth of knowledge not only about how students learn to read but specifically about how to teach students to read, rather than merely guide, encourage, or support them.

  - References are checked for primary sources, researchers, and trusted peer-reviewed journals that present the consensus around the science of reading.

Each of the five components is assessed separately within each course. Points awarded for use of course time, demonstration of knowledge, and text coverage are combined to create five separate component scores (phonemic awareness, phonics, fluency, vocabulary, and reading comprehension) for each course. If a program includes multiple reading courses, the program score for each component is the highest course score for that component. The five program-level component scores determine the overall grade.
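The roll-up from courses to program can be sketched as follows: each course's component score is the sum of its three element scores, and the program keeps the best course score per component. The point values are hypothetical, and the step from the five program-level scores to an overall grade is left out because its thresholds are not given here.

```python
from typing import Dict, List, Tuple

COMPONENTS = ["phonemic awareness", "phonics", "fluency", "vocabulary", "reading comprehension"]

# component -> (course time, demonstration of knowledge, text coverage) points
Course = Dict[str, Tuple[int, int, int]]


def program_component_scores(courses: List[Course]) -> Dict[str, int]:
    """Best course score per component, where a course score sums its three elements."""
    return {c: max(sum(course[c]) for course in courses) for c in COMPONENTS}


# Hypothetical points for two required reading courses
course_a = {"phonemic awareness": (1, 1, 1), "phonics": (2, 1, 1), "fluency": (1, 0, 1),
            "vocabulary": (0, 0, 0), "reading comprehension": (0, 0, 0)}
course_b = {"phonemic awareness": (0, 0, 0), "phonics": (0, 0, 1), "fluency": (1, 1, 1),
            "vocabulary": (2, 1, 1), "reading comprehension": (2, 1, 1)}
print(program_component_scores([course_a, course_b]))
# {'phonemic awareness': 3, 'phonics': 4, 'fluency': 3, 'vocabulary': 4, 'reading comprehension': 4}
```

Taking the maximum per component, rather than averaging across courses, means a program is not penalized for a second course that touches a component only lightly.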

Scoring with less information

Due to the critical importance of reading instruction, NCTQ developed a means of evaluating elementary programs on this standard in cases where course details are missing from submitted material or where we could not obtain all reading syllabi.

This process relies on two key sources of data:

- The syllabus for at least one course focused on foundational literacy. The syllabi for peripheral courses that may touch on literacy instruction, but are not core foundational literacy courses, are never substituted.

- The assigned textbooks for all required literacy coursework. Where this information cannot be sourced from a syllabus, we identify the required textbooks using the institution's bookstore.
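The two preconditions for scoring with less information can be expressed as a single eligibility check. The `LiteracyCourse` record, its fields, and the course codes are hypothetical; the point the sketch encodes is that only a core foundational-literacy syllabus satisfies the first condition, never a peripheral course's.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LiteracyCourse:
    code: str
    foundational: bool       # a core foundational-literacy course, not a peripheral one
    syllabus_obtained: bool
    textbooks_known: bool    # from the syllabus or, failing that, the bookstore


def can_score_with_less_information(required: List[LiteracyCourse]) -> bool:
    has_core_syllabus = any(c.foundational and c.syllabus_obtained for c in required)
    all_texts_known = all(c.textbooks_known for c in required)
    return has_core_syllabus and all_texts_known


courses = [
    LiteracyCourse("READ 301", foundational=True, syllabus_obtained=True, textbooks_known=True),
    LiteracyCourse("EDUC 250", foundational=False, syllabus_obtained=False, textbooks_known=True),
]
print(can_score_with_less_information(courses))  # True
```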

Non-traditional programs

Our analysis of non-traditional programs considers only the coursework required before candidates become teachers of record. Reading instruction is simply too important for teachers to be learning while on the job. To account for the limited timeframe for completing such coursework, we also consider whether the program requires a passing score on a reading-specific licensing test before candidates enter the classroom.


Elementary Math

Coming Soon

Building Knowledge

Coming Soon

Clinical Practice

See the Rubric.

Evaluation is based on information from a variety of sources, primarily the following:

- Student teaching handbooks, student teaching syllabi, and other documents that describe program policies and practices during student teaching, residency, or the first year in the classroom (for non-traditional programs where participants are teachers of record)

- Applications filled out by prospective mentor/cooperating teachers

- Forms returned to programs by school districts with information about prospective mentor/cooperating teachers

- Correspondence between programs and school districts during the process of selecting mentor/cooperating teachers

- Contracts between programs and the school districts where program participants are placed for student teaching, residency, or the first year in the classroom (for non-traditional programs where participants are teachers of record)

A team of analysts examines the materials collected for each program. Twenty percent of programs are randomly selected for a second evaluation to assess scoring variances.
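The double-scoring step (15 percent of syllabi for Early Reading, 20 percent of programs here) can be sketched as a seeded random sample plus a simple agreement rate. How scoring variance is actually measured is not described, so `agreement_rate` is one hypothetical choice, as are the function names and the rule of rounding the sample size to the nearest whole item.

```python
import random
from typing import Dict, List


def second_review_sample(ids: List[str], fraction: float, seed: int = 0) -> List[str]:
    """Randomly pick about `fraction` of the items (at least one) for a second scorer."""
    if not ids:
        return []
    k = min(len(ids), max(1, round(fraction * len(ids))))
    return random.Random(seed).sample(ids, k)


def agreement_rate(first: Dict[str, int], second: Dict[str, int]) -> float:
    """Share of double-scored items on which the two scores match."""
    shared = first.keys() & second.keys()
    if not shared:
        raise ValueError("no items were scored by both reviewers")
    return sum(first[k] == second[k] for k in shared) / len(shared)


programs = [f"program-{i}" for i in range(50)]
print(len(second_review_sample(programs, 0.20)))  # 10
```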

Traditional programs were formerly evaluated under the Student Teaching standard, and non-traditional programs under the Supervised Practice standard. The Clinical Practice standard combines these two standards.

Length and Intensity of Student Teaching

For the purpose of this evaluation, student teaching is defined as an extended experience in which future teachers spend time in the classroom of an experienced teacher.

When identifying the length of student teaching, analysts look for the number of weeks during the final year of the program when teacher candidates spend the equivalent of at least four days per week in the classroom of an experienced teacher.

Analysts also track the number of weeks during which student teachers are in charge of instruction for the whole class, which at a minimum means taking the lead on planning for the full day of classes. This measure does not affect a program's overall grade.
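Both week counts can be sketched over a list of weekly records for the final year. The `Week` record is hypothetical, and representing "the equivalent of at least four days" as a possibly fractional day count is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Week:
    days_in_classroom: float       # days, or day-equivalents, with the experienced teacher
    leads_full_day_planning: bool  # student teacher leads planning for the full day


def student_teaching_weeks(final_year: List[Week]) -> int:
    """Length measure: weeks with the equivalent of at least four days in the classroom."""
    return sum(1 for w in final_year if w.days_in_classroom >= 4)


def lead_teaching_weeks(final_year: List[Week]) -> int:
    """Tracked only: weeks in charge of whole-class instruction."""
    return sum(1 for w in final_year if w.leads_full_day_planning)


# Hypothetical final year: 4 part-time weeks, then 12 full weeks, the last 4 as lead teacher
final_year = ([Week(2, False) for _ in range(4)]
              + [Week(5, False) for _ in range(8)]
              + [Week(5, True) for _ in range(4)])
print(student_teaching_weeks(final_year), lead_teaching_weeks(final_year))  # 12 4
```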

Written Feedback Based on Observations

Observations are counted if they occur during the final semester of student teaching, residency, or the equivalent; or, for non-traditional programs, if they occur during the first twelve weeks in which participants are teachers of record. Only observations conducted by program supervisors and accompanied by written feedback are relevant.

If a program does not require written feedback on individual observations, analysts count the number of times that feedback based on those observations is given through summative evaluations or in other written forms. Feedback from the same observation, however, is counted only once.
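The counting rules can be sketched as a filter plus a set of observation IDs; the set is what keeps feedback on the same observation from being counted twice when it appears both on an observation form and in a summative evaluation. The `Observation` fields and the `feedback_refs` input (IDs of observations cited in summative evaluations or other written feedback) are hypothetical representations.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Observation:
    obs_id: str
    by_program_supervisor: bool
    in_window: bool         # final semester, or first twelve weeks as teacher of record
    written_feedback: bool  # written feedback given on this observation itself


def count_observations(observations: List[Observation], feedback_refs: Iterable[str]) -> int:
    cited = set(feedback_refs)
    # A set of IDs counts each qualifying observation once, however often it is cited.
    counted = {
        o.obs_id
        for o in observations
        if o.by_program_supervisor and o.in_window
        and (o.written_feedback or o.obs_id in cited)
    }
    return len(counted)


obs = [
    Observation("o1", True, True, True),
    Observation("o2", True, True, False),  # feedback only in a summative evaluation
    Observation("o3", False, True, True),  # mentor teacher, not a program supervisor
    Observation("o4", True, False, True),  # outside the counting window
]
print(count_observations(obs, ["o1", "o2", "o2"]))  # 2
```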

Selection of Cooperating/Mentor Teachers

Recognizing that selection of mentor/cooperating teachers is a cooperative process involving both programs and their school district partners, we look to see that programs play an active role in the process by collecting information, beyond years of experience or area of certification, that directly reveals potential cooperating teachers' abilities and allows programs to choose among nominees based on this information. This can be done in a variety of ways, for example by asking cooperating teachers to fill out an application or requesting that principals comment on the mentorship and instructional skills of teachers they nominate. It is especially important to screen new cooperating/mentor teachers who do not have a previous history with the program.

Additional credit is given to programs that screen mentor/cooperating teachers for demonstrated mentorship ability and/or strong instructional skills, as shown by the teacher's positive impact on students' learning.

Clear requirements for mentor/cooperating teachers can help to guide the selection process, so analysts also track whether program criteria for mentor/cooperating teachers state that they should be both strong mentors of adults and highly effective instructors. However, this is not factored into overall grades.


Classroom Management

See the Rubric.

Evaluation is based on the following sources of information:

- Observation and evaluation forms used by program supervisors or mentor/cooperating teachers to evaluate student teachers, residents, or initial licensure candidates during their first year in the classroom (for programs in which participants are teachers of record)

- Any accompanying rubrics or guides that explain how the evaluation forms are used

A team of analysts searches clinical practice handbooks, program websites, and related sources to identify the instruments each program uses to evaluate student teachers, residents, or initial licensure candidates during their first year in the classroom (for programs in which participants are teachers of record).

Observation and evaluation forms are both considered under the standard. These instruments are relevant if they are used by program supervisors or mentor/cooperating teachers. Programs are evaluated only when analysts are able to identify and collect the full set of relevant forms. When this is not possible, the program is not scored. Twenty percent of programs are randomly selected for a second evaluation to assess scoring variances.

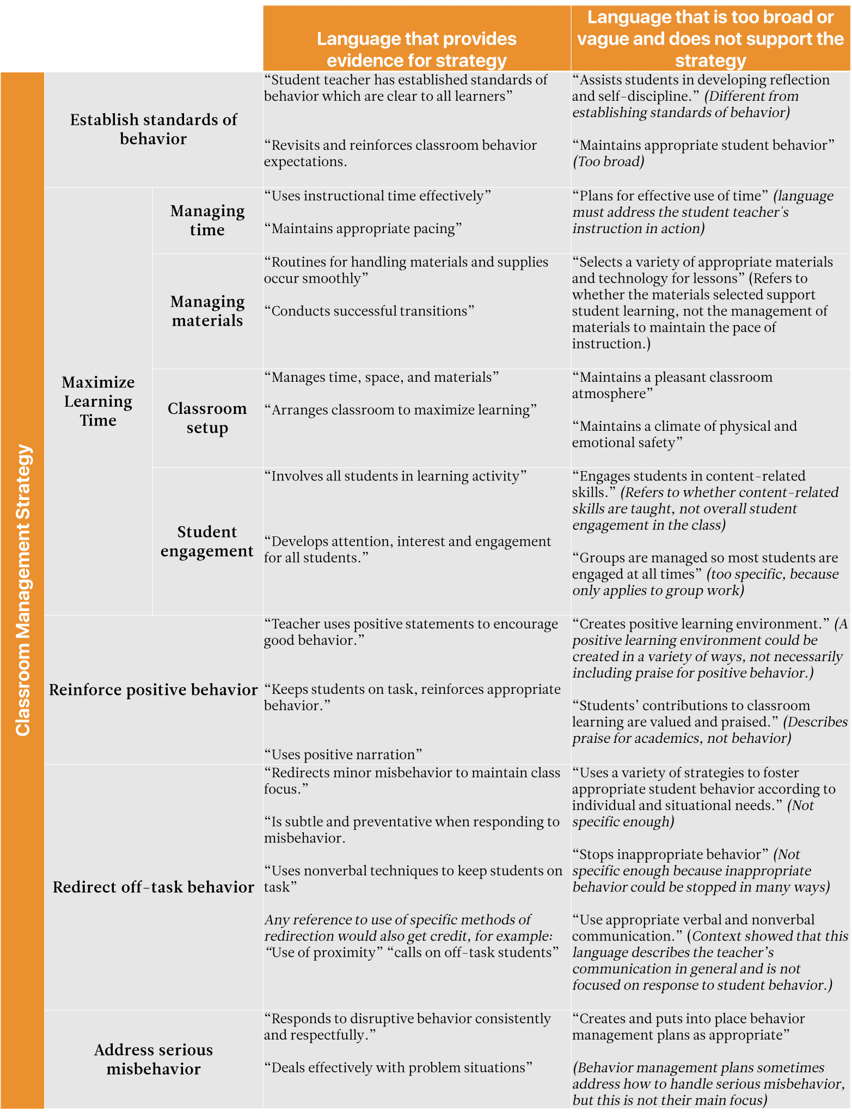

Grades are based on whether observation and evaluation forms specifically refer to the five strategies identified by strong research as being useful for all students. These strategies are:

- Establishing rules and routines that set expectations for behavior;

- Maximizing learning time by (a) promoting student engagement, (b) managing time, (c) managing class materials, and (d) managing the physical setup of the classroom;

- Reinforcing positive behavior by using meaningful praise and other forms of positive reinforcement;

- Redirecting off-task behavior by using unobtrusive means that do not interrupt instruction and that prevent and manage such behavior;

- Addressing serious misbehavior with consistent, appropriate consequences.
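Whether a form refers to a strategy is an analyst's judgment, not a keyword match, so the sketch below starts from analyst-coded tags per form and shows only the aggregation: a program without its full set of forms is not scored, and otherwise its coverage is the set of strategies found across its forms. Pooling across forms is an assumption, the strategy labels are shorthand for the list above, and the mapping from coverage to a grade is omitted because it is not given here.

```python
from typing import List, Optional, Set

STRATEGIES = {
    "rules and routines",
    "maximizing learning time",
    "reinforcing positive behavior",
    "redirecting off-task behavior",
    "addressing serious misbehavior",
}


def strategies_addressed(form_tags: List[Set[str]], full_set_collected: bool) -> Optional[Set[str]]:
    """Strategies referenced across a program's forms, or None if the program is not scored."""
    if not full_set_collected:
        return None
    return set().union(*form_tags) & STRATEGIES


forms = [{"rules and routines", "maximizing learning time"}, {"reinforcing positive behavior"}]
covered = strategies_addressed(forms, full_set_collected=True)
print(len(covered), "of", len(STRATEGIES))  # 3 of 5
```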

The examples below, taken from actual programs that we evaluated, show language that would and would not receive credit for each of the five strategies.